Agentic AI is opening a bold new frontier in artificial intelligence, one that promises to redefine productivity, automate complexity, and unlock unprecedented business value. Unlike its predecessors, this technology doesn't just process information or generate content; it acts. It makes decisions, executes multi-step tasks, and operates autonomously to achieve goals. For executives, the promise is transformative efficiency. For end-users, it's the power to create their own digital assistants with just a few clicks.

But beneath the excitement lies a critical risk. Built on no-code platforms like those in Microsoft 365, agentic AI gives every user the power to create and deploy autonomous agents, without IT oversight. The result? A digital Wild West of “shadow agents” racking up costs, exposing data, and creating a sprawling, ungovernable ecosystem. What begins as a promise of efficiency can quickly spiral into chaos.

This article is your guide through this new landscape. We will demystify what agentic AI is, explore its powerful use cases within the Microsoft ecosystem, and, most importantly, provide a clear, actionable framework for governing it.

What is agentic AI? A definition

At its core, agentic AI refers to autonomous AI systems capable of perceiving their environment, making independent decisions, and acting toward specific, often complex, goals with minimal human oversight. Think of it as moving from an AI that can answer a question to an AI that can act on the answer.

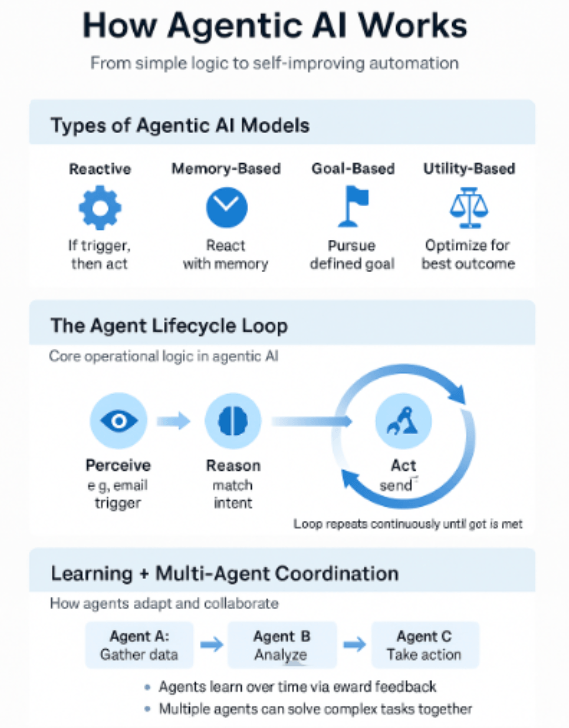

Instead of a simple input-output model, an AI agent operates in a continuous loop:

- It perceives its digital environment using technologies like natural language processing (e.g., interpreting user prompts, new emails, or database updates).

- It reasons and makes decisions based on its goals and programming.

- It acts upon the environment to move closer to its goal (e.g., schedules a meeting, updates a CRM record, orders supplies).

This ability to act autonomously is what separates it from other forms of AI and unlocks its immense potential. As we'll see, this is a critical distinction that is shaping the new era of modern work and requires a new approach to management and oversight.
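The perceive, reason, act loop described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular platform implements agents: the `Inbox` class, the keyword rule in `reason`, and the `max_steps` cap are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Inbox:
    """Hypothetical stand-in for the agent's digital environment."""
    unread: list
    scheduled: list = field(default_factory=list)


def perceive(env: Inbox) -> Optional[str]:
    """Perceive: take the next unread message, if there is one."""
    return env.unread.pop(0) if env.unread else None


def reason(message: str) -> str:
    """Reason: choose an action. A real agent would typically call a language model here."""
    return "schedule_meeting" if "meeting" in message.lower() else "ignore"


def act(env: Inbox, action: str, message: str) -> None:
    """Act: change the environment to move closer to the goal."""
    if action == "schedule_meeting":
        env.scheduled.append(message)


def run_agent(env: Inbox, max_steps: int = 100) -> int:
    """Repeat the loop until nothing is left to perceive or the step cap is hit."""
    steps = 0
    while steps < max_steps:
        message = perceive(env)
        if message is None:
            break
        act(env, reason(message), message)
        steps += 1
    return steps


inbox = Inbox(unread=["Can we set up a meeting on Q3?", "Lunch menu", "Meeting about budget"])
print(run_agent(inbox), inbox.scheduled)
```

The step cap is a deliberate design choice: even in a toy loop, an upper bound on iterations keeps a faulty agent from running forever.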

Types of agentic AI models and how they work

Agentic AI systems vary in complexity, from specific task responders to intelligent, goal-driven agents. Understanding their foundational models is crucial to governing them effectively across platforms such as Microsoft Copilot Studio, ServiceNow, and OpenAI frameworks.

- Reactive agents respond directly to current inputs, with no memory or long-term planning. Ideal for rule-based automations like sending alerts or classifying requests.

- Memory-based agents use past interactions or stored data to inform decisions. This allows for adaptive behaviors based on user history or system state.

- Goal-based agents reason about future outcomes and take actions that move toward a specific objective, such as completing a workflow or resolving a support case.

- Utility-based agents weigh trade-offs and choose actions that maximize a predefined value, such as efficiency, speed, or customer satisfaction.

Together, these models represent a spectrum, from rule-based logic to autonomous execution, enabling AI agents to carry out both simple and complex tasks with minimal human intervention.
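To make the spectrum concrete, here is a hedged sketch of the four agent types as small Python functions. All names, rules, and thresholds are hypothetical and chosen only to show how the decision logic differs from one type to the next.

```python
from typing import Optional


def reactive_agent(request: str) -> str:
    """Reactive: map the current input straight to an action, with no memory."""
    return "send_alert" if "outage" in request.lower() else "file_ticket"


class MemoryAgent:
    """Memory-based: earlier interactions change the response."""

    def __init__(self) -> None:
        self.contacts = {}  # user -> number of requests seen so far

    def handle(self, user: str) -> str:
        self.contacts[user] = self.contacts.get(user, 0) + 1
        # Assumed rule: escalate once the same user has asked three times.
        return "escalate" if self.contacts[user] >= 3 else "reply"


def goal_based_agent(done: set, workflow: list) -> Optional[str]:
    """Goal-based: pick the next step that moves the workflow toward completion."""
    for step in workflow:
        if step not in done:
            return step
    return None  # every step is done, so the goal is reached


def utility_based_agent(options: dict, weights: dict) -> str:
    """Utility-based: score each option on weighted criteria and pick the best."""
    def utility(scores: dict) -> float:
        return sum(weights[name] * scores[name] for name in weights)
    return max(options, key=lambda option: utility(options[option]))


suppliers = {
    "fast": {"speed": 0.9, "savings": 0.2},
    "cheap": {"speed": 0.4, "savings": 0.9},
}
print(utility_based_agent(suppliers, {"speed": 0.3, "savings": 0.7}))
```

Changing the weights changes the choice, which is the defining trait of a utility-based agent: the trade-off is explicit and adjustable.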

Learning and coordination: Enabling autonomy at scale

Agentic systems are built on sophisticated models that enable their autonomy. Key concepts include:

- Reinforcement learning and autonomy: Some AI agents learn through trial and error, a process called reinforcement learning. They perform an action, observe the outcome, and receive a "reward" or "penalty." Over time, this allows them to learn which sequence of actions best achieves a goal, even in unfamiliar situations.

- Multi-agent coordination: In advanced scenarios, multiple agents can collaborate to solve problems that are too complex for a single agent. For example, one agent might be responsible for gathering data, another for analyzing it, and a third for executing the resulting plan. This is where the true power of large-scale automation emerges: automating complex tasks across distributed systems.
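The trial-and-error idea behind reinforcement learning can be illustrated with a very small value-learning loop. This is a sketch under stated assumptions: the three actions, their fixed rewards, and the `epsilon` and `step_size` parameters are hypothetical, and enterprise agent platforms do not necessarily train their agents this way.

```python
import random


def learn_action_values(rewards: dict, episodes: int = 200, epsilon: float = 0.1,
                        step_size: float = 0.5, seed: int = 0) -> dict:
    """Estimate the value of each action from repeated reward or penalty feedback."""
    rng = random.Random(seed)
    actions = list(rewards)
    values = {action: 0.0 for action in actions}
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(actions)            # explore: try something at random
        else:
            action = max(actions, key=values.get)   # exploit: use the best estimate so far
        reward = rewards[action]                    # observe the outcome
        # Nudge the estimate toward the observed reward.
        values[action] += step_size * (reward - values[action])
    return values


# Hypothetical feedback: retrying blindly is penalized, escalating is rewarded.
values = learn_action_values({"retry": -1.0, "escalate": 1.0, "wait": 0.0})
print(max(values, key=values.get))
```

The agent starts with no preference, tries actions, and gradually shifts toward the one that has paid off most often.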

Agentic AI vs generative AI: Understanding the key difference

The terms are often used interchangeably, but they represent distinct capabilities. The distinction between agentic AI and generative AI is crucial for understanding the technology's impact.

Generative AI, popularized by tools like ChatGPT and GitHub Copilot, is focused on creating new content. It takes a prompt and generates text, images, code, or audio. It is a powerful content creation engine.

Agentic AI, on the other hand, is an action-oriented execution engine. It may use generative AI to understand a request or formulate a plan, but its primary purpose is to perform tasks and interact with other systems. In enterprise environments, platforms like Microsoft Copilot Studio, ServiceNow Virtual Agent, or Salesforce Einstein bots bring this concept to life. Frameworks like AutoGPT and emerging agentic models from OpenAI demonstrate its potential for broader autonomous orchestration.

Here’s a simple breakdown:

| Feature | Generative AI | Agentic AI |
| --- | --- | --- |
| Primary function | Content creation (text, images, code) | Task execution, automating repetitive tasks, and achieving specific goals |
| Core capability | Responds to prompts, generates outputs. | Perceives, reasons, and acts autonomously. |
| Interaction model | Primarily conversational, one-off requests. | Continuous, goal-oriented operation. |
| Example | "Write an email to my team about the Q3 results." | "Monitor my inbox for Q3 performance reports from finance, summarize the key findings, draft an email to my team with the summary, and schedule a follow-up meeting for next Tuesday." |
| Example tools | ChatGPT, GitHub Copilot, Claude | Microsoft Copilot Studio agents, Salesforce Einstein bots, ServiceNow Virtual Agent |
| Primary risk | Misinformation, accuracy, and intellectual property. | Unintended actions, cost overruns, data breaches, system sprawl. |

Bridging the two: Microsoft 365 Copilot + Copilot Studio

A perfect example of this evolution is Microsoft’s own platform. Microsoft 365 Copilot, on its own, is largely generative. It helps users write emails, summarize documents, or create slides. But when combined with Copilot Studio, users can build autonomous agents that monitor triggers, execute logic, and integrate across Microsoft 365 and beyond. This pairing transforms Copilot from a content assistant into an agentic AI platform.

The hidden risks: When citizen-led AI becomes a liability

While many will focus on the utopian vision of efficiency, few acknowledge the risks of implementing agentic AI without governance. The central problem is that these agents are often not being developed in a controlled, centralized IT process. They are being created by business users on platforms like Microsoft Copilot Studio and the Power Platform.

This democratization of development, while powerful, leaves IT with neither authority nor control over the actions, data access, and costs of these agents. This creates three critical pain points.

Pain Point 1: The astronomical and unpredictable cost

Challenge: How much are these agents costing the company?

The promise that agents will save you money is only true if their costs are controlled. Because agents can interact with paid services and APIs (like Azure AI services, formerly Azure Cognitive Services, or third-party connectors), a misconfiguration can be catastrophic.

We've spoken to an organization where a single, poorly configured agent created by an end-user accidentally entered an infinite loop. Within one hour, it had racked up over $19,000 in API consumption costs. This wasn't malicious. It was a simple mistake born from a lack of user onboarding, guardrails, and central monitoring. Without visibility, every agent is a potential budget time bomb.

Pain Point 2: The silent data breach

Challenge: Who has access to these agents, and what data can the agents access?

This is perhaps the most severe risk. An AI agent typically runs with the permissions of its creator. Consider the implications:

- An agent built by a senior sales executive could potentially access every sensitive customer record, sales forecast, and contract in their OneDrive and SharePoint sites.

- An agent created by a member of the HR department could have access to confidential employee PII, salary information, and performance reviews.

Now, imagine that agent is poorly configured or, worse, overshared with a wide group of users. The agent itself becomes a backdoor, giving users indirect access to data they should never see. It’s a compliance officer's worst nightmare, creating massive security and GDPR risks that are almost impossible to track without the right tools.
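The backdoor effect is easy to express as a set difference. The sketch below assumes a simplified model in which the agent runs with its creator's permissions and every resource is a plain string; the function name and the sample data are hypothetical.

```python
def indirect_exposure(creator_access: set, shared_with: dict) -> dict:
    """For each user the agent is shared with, list what they can reach only through the agent."""
    exposure = {}
    for user, own_access in shared_with.items():
        extra = creator_access - own_access  # reachable via the agent, not directly
        if extra:
            exposure[user] = extra
    return exposure


# Hypothetical example: an agent built by a sales executive and shared with two colleagues.
creator_access = {"contracts", "forecasts", "team-wiki"}
shared_with = {
    "intern": {"team-wiki"},
    "sales-lead": {"contracts", "forecasts", "team-wiki"},
}
print(indirect_exposure(creator_access, shared_with))
```

Anything that shows up in the result is data a user could reach through the agent but not on their own, which is exactly what an access review needs to surface.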

Pain Point 3: Sprawl and the rise of "shadow agents"

Challenge: How many agents are even running in our company?

Just as "shadow IT" became a major headache with the rise of SaaS apps, we are now entering the era of "shadow AI." When any of the thousands of users in your organization can create an autonomous agent, you quickly lose track.

- How many agents exist?

- Are they duplicates of each other?

- Are they still needed, or are they "orphaned" after an employee leaves the company?

- What business processes now depend on these unmanaged, undocumented agents?

This sprawl makes the environment fragile, insecure, and impossible to manage, let alone optimize.

Agentic AI in the Microsoft 365 ecosystem

The Microsoft cloud is fertile ground for agentic AI. With integrated tools and a vast data landscape, the use cases are compelling, but so are the governance challenges. This makes agentic AI in Microsoft 365 a very real and immediate topic for IT leaders.

Use case 1: Workflow automation with Copilot agents

Users can leverage Microsoft Copilot Studio to build custom agents that automate complex business processes. For example, an agent could manage the entire procurement process: receive a request in Teams, find the best supplier via a connector, generate a purchase order, send it for approval, and update the finance system upon payment. While incredibly powerful, building Copilot agents without control opens the door to all the risks we've discussed. These range from cost overruns with premium connectors to process failures from poorly designed logic.

Use case 2: Knowledge agents in Teams and SharePoint

Imagine an agent that lives within a specific Teams channel. Its goal is to be the subject matter expert for that project. It can answer questions by finding, reading, and summarizing documents from the connected SharePoint site. This is one of the most popular examples of agentic AI. However, its output is only as good as the content it draws on. If your SharePoint is cluttered with stale, duplicate, or trivial content, the agent will confidently provide misinformation, leading to poor business decisions.

Use case 3: Security and compliance automation

Ironically, agentic AI can also be a tool for governance. An IT department could build an agent to monitor for over-shared files and automatically initiate an access review with the file owner. This is a fantastic use case, but it highlights the need for a central platform to manage even these "official" agents. You need to ensure they are functioning correctly, track their actions for audit purposes, and manage their lifecycle.

The market for these agentic AI solutions is expanding rapidly. While Microsoft is a key player, we see similar trends at Google, OpenAI, and ServiceNow, all aiming to embed autonomous capabilities deep within their ecosystems.

Governance: The critical enabler for responsible AI

Faced with these risks, the spontaneous reaction might be to lock everything down. But that would stifle the very innovation you’re trying to foster. The answer isn't to block agentic AI. It's to govern it.

Effective AI governance provides the guardrails to manage growing AI capabilities and allow users to innovate safely. It transforms AI from a source of risk into a scalable, secure, and cost-effective asset. This requires a shift in mindset: governance must be established before mass rollout, not as an afterthought. A comprehensive approach to governing Copilot and agentic AI is the only way to balance empowerment with control.

A 5-step framework for responsible implementation

To avoid the pitfalls of uncontrolled AI, organizations need a structured approach. Here are the best practices companies must consider.

1. Inventory and track: Achieve full visibility

The first step is to get a complete, real-time inventory of every single AI agent across your Microsoft 365 tenant, including Copilot and the Power Platform. You need to know:

- Who created the agent?

- When was it created and last modified?

- Is the owner still with the company?
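The three questions above map naturally onto a small inventory record. The following sketch assumes a hypothetical `AgentRecord` structure and a 90-day staleness threshold; in a real tenant this data would have to come from the platform's admin tooling.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AgentRecord:
    """Hypothetical inventory entry for one agent."""
    name: str
    owner: str
    created: date
    last_modified: date


def find_orphaned(agents: list, active_users: set) -> list:
    """Agents whose owner is no longer with the company."""
    return [agent.name for agent in agents if agent.owner not in active_users]


def find_stale(agents: list, today: date, max_age_days: int = 90) -> list:
    """Agents that have not been modified within the allowed window."""
    return [agent.name for agent in agents
            if (today - agent.last_modified).days > max_age_days]


inventory = [
    AgentRecord("invoice-bot", "dana", date(2024, 11, 2), date(2025, 1, 10)),
    AgentRecord("hr-helper", "lee", date(2025, 3, 15), date(2025, 5, 20)),
]
print(find_orphaned(inventory, active_users={"lee"}))
print(find_stale(inventory, today=date(2025, 6, 1)))
```

Keeping the record immutable (`frozen=True`) is a small design choice that fits an audit-style inventory: entries are replaced, not edited in place.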

2. Monitor: Understand what your agents are doing

Once you have an inventory, you need to understand their behavior. This means monitoring their interactions and connections.

- What data sources are they accessing (SharePoint, Exchange, Dataverse)?

- What connectors are they using (standard, premium, custom)?

- What actions are they performing?
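One way to answer these monitoring questions is to roll an activity log up into a per-agent summary. This is a minimal sketch: the event fields, the connector names, and the `CONNECTOR_TIERS` mapping are all hypothetical placeholders, not real connector classifications.

```python
from collections import defaultdict

# Hypothetical connector catalogue; a real tenant would supply its own.
CONNECTOR_TIERS = {"office_mail": "standard", "crm_sync": "premium", "legacy_erp": "custom"}


def summarize_activity(events: list) -> dict:
    """Group log events by agent: data sources touched, connector tiers used, action count."""
    summary = defaultdict(lambda: {"data_sources": set(), "connector_tiers": set(), "actions": 0})
    for event in events:
        entry = summary[event["agent"]]
        entry["data_sources"].add(event["data_source"])
        entry["connector_tiers"].add(CONNECTOR_TIERS.get(event["connector"], "unknown"))
        entry["actions"] += 1
    return dict(summary)


log = [
    {"agent": "invoice-bot", "data_source": "SharePoint", "connector": "office_mail"},
    {"agent": "invoice-bot", "data_source": "Dataverse", "connector": "crm_sync"},
    {"agent": "hr-helper", "data_source": "Exchange", "connector": "payroll_api"},
]
for agent, details in summarize_activity(log).items():
    print(agent, details)
```

Connectors missing from the catalogue are reported as `unknown` rather than silently dropped, so unclassified integrations stay visible.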

3. Manage usage: Define and enforce policies

With visibility and understanding, you can now set rules.

- Usage policies: Who is allowed to create agents? Are there different rules for different departments?

- Access reviews: Automatically trigger reviews for agents that access sensitive data or are shared widely.

- Lifecycle management: Define policies and resolution processes to identify and handle inactive, orphaned, or non-compliant agents. Prevent sprawl before it grows out of control.
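Rules like these are easiest to enforce when they are written down as data and checked automatically. The sketch below is one possible shape for such a check; the `Agent` and `Policy` fields and the finding labels are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Agent:
    """Hypothetical view of an agent for policy checks."""
    name: str
    creator_department: str
    accesses_sensitive_data: bool
    shared_with_count: int


@dataclass(frozen=True)
class Policy:
    """Hypothetical usage policy."""
    allowed_departments: frozenset
    max_share_count: int


def evaluate(agent: Agent, policy: Policy) -> list:
    """Return the policy findings for one agent; an empty list means compliant."""
    findings = []
    if agent.creator_department not in policy.allowed_departments:
        findings.append("creator_not_allowed")
    # Trigger a review for sensitive data access or wide sharing.
    if agent.accesses_sensitive_data or agent.shared_with_count > policy.max_share_count:
        findings.append("access_review_required")
    return findings


policy = Policy(allowed_departments=frozenset({"sales", "it"}), max_share_count=25)
print(evaluate(Agent("salary-lookup", "hr", True, 3), policy))
```

Returning a list of findings instead of a single pass or fail flag lets the same check feed both blocking rules and softer follow-ups such as an access review.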

4. Control costs: Prevent budget blowouts

Next, you must get a handle on the financial impact.

- Cost monitoring: Understand the costs agents incur, especially from premium connectors and Azure services.

- Limits and alerts: Define cost limits and set automated alerts to prevent excessive use or infinite loops.

- Chargebacks: Implement dashboards to attribute usage costs back to specific departments, fostering accountability.
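A cost limit with an alert threshold can be sketched as a small guard object that every paid call has to pass through. The class and function names, the 80 percent alert level, and the use of whole cents are assumptions made for this example, not features of a specific product.

```python
from collections import defaultdict


class BudgetExceeded(RuntimeError):
    """Raised when a charge would push an agent past its cost limit."""


class CostGuard:
    """Tracks spend in whole cents and blocks charges beyond the limit."""

    def __init__(self, limit_cents: int, alert_percent: int = 80) -> None:
        self.limit_cents = limit_cents
        self.alert_at_cents = limit_cents * alert_percent // 100
        self.spent_cents = 0
        self.alert_sent = False

    def charge(self, cents: int) -> None:
        if self.spent_cents + cents > self.limit_cents:
            raise BudgetExceeded(f"limit of {self.limit_cents} cents reached")
        self.spent_cents += cents
        if not self.alert_sent and self.spent_cents >= self.alert_at_cents:
            self.alert_sent = True  # a real system would notify the agent owner here


def chargeback(usage: list) -> dict:
    """Attribute (department, cents) usage entries back to each department."""
    totals = defaultdict(int)
    for department, cents in usage:
        totals[department] += cents
    return dict(totals)


guard = CostGuard(limit_cents=1000)
calls = 0
try:
    while True:           # simulated runaway loop
        guard.charge(50)  # each hypothetical API call costs 50 cents
        calls += 1
except BudgetExceeded:
    print(f"stopped after {calls} calls, spent {guard.spent_cents} cents")

print(chargeback([("sales", 300), ("hr", 120), ("sales", 50)]))
```

The guard turns an open-ended loop into a bounded one: the runaway agent stops at its limit instead of an hour and a five-figure bill later.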

5. Choose the right tools: Enable governance at scale

Putting this framework into practice at scale requires more than manual effort. To govern agentic AI effectively across a large organization, you need dedicated tools that provide automation, visibility, and control. When selecting a governance platform, consider the following criteria:

- Microsoft 365 compatibility: Ensure the platform supports Power Platform, Copilot Studio, SharePoint, Teams, and OneDrive natively.

- Comprehensive visibility: Choose tools that inventory agents, track usage, and surface data access and sharing behavior.

- Automated policy enforcement: Look for solutions that monitor compliance, enforce rules, and manage agent lifecycles automatically.

- Support for innovation: Governance should provide guardrails without limiting how teams experiment or deploy agents.

- Cross-platform integration: Select platforms that govern both Microsoft and third-party services through APIs and external connectors.

With the right governance platform in place, organizations can safely and efficiently scale agentic AI. Solutions like Rencore Governance support this by automating critical governance tasks, reducing risk, and helping teams move faster without creating IT bottlenecks.

For a practical guide to implementing policies and processes around Copilot and agentic AI, download the whitepaper Regulating your AI companion: Best practices for Microsoft Copilot governance.

Conclusion: Charting the future with governed AI agents

Agentic AI is no longer experimental or abstract. It's here now, and it may already be running in your tenant. It holds the potential to deliver on the long-held promise of true digital transformation and hyper-automation.

However, the common misconception that these agents will magically make your company more efficient and save you money is dangerously incomplete. That outcome is only possible when this powerful technology is deployed responsibly, with a robust governance framework in place from day one.

By embracing a strategy of proactive governance—one built on visibility, policy, and automation—you can unlock the immense benefits of agentic AI while systematically mitigating the risks. You can empower your users to innovate, your processes to become more intelligent, and your business to lead the way, all with the confidence that you remain firmly in control.


Frequently asked questions (FAQs)

What makes agentic AI different from traditional AI systems?

The key difference is autonomy and action. Traditional AI systems are primarily analytical tools that process data and provide insights or predictions. Agentic AI goes a step further. It uses those insights to make decisions and take actions in the digital world to achieve a specific goal, often without direct human command for each step.

What is the difference between generative AI and agentic AI?

Generative AI creates content such as text, images, or code based on prompts. Agentic AI, by contrast, is designed to take action. It perceives, reasons, and executes tasks autonomously to achieve goals.

How can organizations ensure agentic AI systems remain secure and compliant?

Security and compliance hinge on governance. Organizations must implement a framework that includes:

- Complete inventory: Continuously discovering all AI-powered agents in the environment.

- Continuous monitoring: Tracking what data agents access and what actions they perform.

- Policy enforcement: Setting and automating rules for who can create agents, what connectors they can use, and how they can be shared.

- Lifecycle management: Automatically handling inactive, orphaned, or non-compliant agents.

Tools like Rencore Governance are essential for automating this process at scale.

How does agentic AI integrate with Microsoft 365 and Power Platform?

Agentic AI integrates deeply into the Microsoft ecosystem through tools like Microsoft Copilot Studio and Power Automate. Users can build agents that connect to and act upon data across the entire M365 suite, including SharePoint, Teams, OneDrive, Exchange, and Dataverse.

These agents leverage Microsoft's underlying AI infrastructure and connectors to automate workflows and interact with both internal Microsoft services and external third-party applications.

Is agentic AI the next big thing?

Agentic AI is emerging as a major shift in how automation works, moving from passive assistance to autonomous execution. While still early in adoption, it is quickly becoming a priority for enterprises using platforms like Microsoft 365, where the ability to act independently is reshaping productivity, workflows, and governance needs.