With the rise of Microsoft 365 Copilot, Power Automate, and Microsoft Loop, a new class of powerful AI agents is operating across your most critical business data and workflows. This integration brings incredible potential for productivity, but it also surfaces every weakness in your governance.

The game has changed. AI governance has shifted from a theoretical discussion in boardrooms to an immediate operational and regulatory necessity. The EU AI Act, which entered into force on August 1, 2024, establishes legally enforceable obligations for companies that develop, deploy, or use AI in the EU market. Suddenly, the lack of control over these new AI agents isn't just a security risk. It's a significant compliance liability.

The problem? The native tools within Microsoft 365 don't provide the comprehensive control layer you need to meet these new demands. This article breaks down the real, unsolved challenges of governing AI within the Microsoft ecosystem and explains how to build a robust AI governance framework that protects your organization.

What is AI governance?

At its core, AI governance is a framework of rules, policies, standards, and processes that guides how AI is developed, deployed, and used. Organizations put these measures in place to ensure their AI technologies are used responsibly, securely, in compliance with legal regulations, and with clear ethical considerations built into every decision.

At the end of the day, AI governance is less about tools and more about collective responsibility. It underpins responsible AI and AI ethics by forcing organizations to consider questions such as:

- Who is responsible for the decisions made by an AI?

- What data is the AI allowed to access and learn from?

- How do we ensure AI outputs are accurate, fair, and unbiased?

- How do we track and audit AI actions to prove compliance?

Why is AI governance important now?

For years, organizations have struggled with cloud governance: managing the sprawl of sites, teams, and apps. Embedded AI agents amplify this challenge dramatically. These agents act on the data within your existing environment, meaning any pre-existing governance gaps are now critical vulnerabilities.

With the EU Artificial Intelligence Act in force, these gaps could translate directly into compliance failures and regulatory penalties. The regulation underscores the urgent need for responsible AI governance and clear AI governance policies to mitigate legal risks. What was once an internal IT concern has become a board-level obligation that business leaders must now prioritize.

Your primary concerns are likely centered on four key areas:

1. Information discovery risks

Sensitive files like M&A documents, HR salary data, or confidential project plans often remain overshared in messy SharePoint environments. Copilot respects existing permissions, but its semantic search instantly surfaces anything a user can technically access, including files that were previously “hidden” only by obscurity. Without clear data ownership and architecture, AI becomes a high-speed data leak machine.

2. Content accuracy risks

AI output is only as good as the data it draws on. This "garbage in, garbage out" principle, well known in data science, applies directly to Copilot: if it grounds its answers in documents that are outdated, duplicated, or factually incorrect, it will generate misleading summaries, inaccurate reports, and "hallucinated" content. The weaker your data integrity, the more these risks multiply. Misinformation can lead to poor business decisions and erode trust in the technology.

3. Security and GDPR risks

How can you ensure that an AI agent, when prompted by a user, doesn't inadvertently process or share data in a way that would breach GDPR? Protecting intellectual property and ensuring data security and data protection standards are upheld is paramount. But it’s incredibly difficult without visibility into AI activities.

4. Cost justification and ROI

Copilot licenses and the underlying infrastructure represent a significant investment. Without clear metrics on adoption, usage, and impact, how can you justify the cost? Proving the ROI of AI requires a governance strategy that includes robust monitoring and reporting with clear governance metrics.

The governance gap in modern AI tools

The most significant shift is that AI is no longer a separate application you log into. It's woven into the fabric of your collaboration suite.

Think about how your teams work. They use Microsoft Teams for communication, SharePoint for document storage, and Power Automate to create workflows. Now, AI is present in all of them, and with it come new governance gaps and risks:

- Microsoft 365 Copilot: Acts as a research assistant, content creator, and meeting summarizer, with access to emails, chats, calendars, and documents. The risk? It can instantly surface overshared or poorly secured data that was previously hidden by obscurity.

- Copilot Studio: Enables users who are not professional developers to build custom Copilot agents for specific processes or knowledge domains. While this democratizes AI development, it also creates risks of agent sprawl, unmanaged connectors, and “shadow AI” operating outside IT’s visibility.

- Power Platform: Allows employees (even without coding skills) to build AI-powered workflows and apps that connect to hundreds of internal and external services. This flexibility drives innovation but can introduce unapproved data flows and compliance gaps.

- Microsoft Loop: Provides collaborative workspaces where AI can co-create and organize content in real time. Without proper lifecycle and access controls, Loop can become another channel where sensitive information is duplicated, overshared, or poorly governed.

In practice, these tools operate as autonomous agents acting directly on your corporate data. That power brings real exposure. Without implementing AI governance, organizations risk losing control of how AI technology interacts with sensitive information. Within Microsoft 365, the governance gaps typically surface in three areas:

1. Access to unstructured, overshared data

The biggest immediate threat of Copilot is its ability to instantly surface overshared or poorly secured data. Years of "permission sprawl" and unstructured data storage mean that sensitive information is often accessible to a much wider audience than intended. Copilot does not override permissions, but it removes the obscurity that once protected this data, making robust access governance non-negotiable.

2. Hallucinated content and flawed decisions

Your Microsoft 365 tenant is likely filled with stale, orphaned, and duplicated content. When an AI agent uses this poor data quality as its "source of truth," it generates flawed outputs. A Power Automate flow might trigger an incorrect action based on outdated data, or Copilot might confidently present a summary based on a draft version of a report.

3. Uncontrolled agent behaviors and "shadow AI"

The citizen developer revolution, powered by the Power Platform, means employees can create their own AI agents and workflows. Without proper oversight, "shadow AI" emerges in the form of unauthorized apps and automations. These can connect to external services, process data outside compliance boundaries, and create dependencies that IT is completely unaware of.
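To make the first gap, overshared data, more concrete, the sketch below screens a site inventory for the pattern most likely to cause surprises once Copilot is switched on: content labeled as sensitive that is also granted to a tenant-wide audience. It is a minimal illustration, not Rencore or Microsoft code. The `Site` record, the label names, and the contoso.com URLs are hypothetical; the broad-audience names mirror SharePoint's built-in "Everyone" and "Everyone except external users" groups. A real check would read permissions and labels from your tenant's own inventory.

```python
from dataclasses import dataclass, field
from typing import Optional

# Tenant-wide audiences, named after SharePoint's built-in groups.
BROAD_PRINCIPALS = {"Everyone", "Everyone except external users"}
# Illustrative sensitivity labels; real tenants define their own taxonomy.
SENSITIVE_LABELS = {"Confidential", "Highly Confidential"}


@dataclass
class Site:
    # Hypothetical inventory record, not a SharePoint API object.
    url: str
    label: Optional[str]
    grants: set[str] = field(default_factory=set)


def needs_review(site: Site) -> bool:
    """True if a sensitive site is also shared with a tenant-wide audience."""
    return site.label in SENSITIVE_LABELS and bool(site.grants & BROAD_PRINCIPALS)


sites = [
    Site("https://contoso.sharepoint.com/sites/MandA", "Highly Confidential",
         {"Everyone except external users", "Deal Team"}),
    Site("https://contoso.sharepoint.com/sites/HR", "Confidential", {"HR Team"}),
    Site("https://contoso.sharepoint.com/sites/Canteen", None, {"Everyone"}),
]
flagged = [s.url for s in sites if needs_review(s)]
print(flagged)
```

Only the M&A site is flagged: the HR site is sensitive but narrowly shared, and the canteen site is broadly shared but unlabeled. Unlabeled content is a separate gap (labeling coverage) that would need its own rule.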

The EU AI Act: Governance is now the law

The conversation around AI governance was once led by think tanks and industry leaders like IBM. Now, it's a binding legal framework. The EU AI Act, formally adopted in 2024, is a landmark regulation that shapes the debate on global AI governance. It has been in force since August 1, 2024, with its requirements becoming applicable in stages over the following years. If you do business in or with the European Union, you are affected.

Rollout timeline at a glance:

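- August 2024: Act enters into force (August 1, 2024)

- February 2025: First provisions apply (e.g., bans on unacceptable-risk systems and AI literacy requirements)

- August 2025: Obligations for general-purpose AI (GPAI) models begin, along with the governance and penalty framework

- August 2026: Most remaining provisions apply, including requirements for many high-risk systems and transparency obligations

- August 2027: Final transitional deadlines, including high-risk AI embedded in regulated products and GPAI models placed on the market before August 2025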

These milestones mark a new era of AI compliance across Europe and set a reference point for AI regulation worldwide. The Act also reinforces fundamental protections such as data privacy and human rights, ensuring AI aligns with broader societal values and is guided by principles of ethical development.

A straightforward breakdown of the regulation

The EU AI Act takes a risk-based approach, meaning the level of regulation depends on the potential harm an AI system could cause.

Who it affects: Any organization that develops, deploys, or uses AI systems within the EU market. Using M365 Copilot within your European operations places you firmly in the "deployer" category, the Act's term for organizations that use an AI system under their own authority.

How it classifies risk: The act creates tiers of risk, from "unacceptable" (which are banned) to "high-risk" (subject to strict requirements), down to "limited" and "minimal" risk. General-purpose AI models (like the ones powering Copilot) have specific transparency obligations, which fall primarily on their providers.

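Key requirements for businesses: The Act's core governance requirements apply in full to high-risk systems, while limited-risk systems face mainly transparency duties. Even where they are not strictly mandatory, these requirements are becoming the benchmark for due diligence. They include: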

- Transparency: Users must be aware that they are interacting with an AI system. You must be able to explain how the AI operates and makes decisions.

- Traceability: You need robust logging and audit trails to track the data inputs, processing steps, and outputs, so you can explain how AI systems operate in practice.

- Human oversight: There must be AI oversight mechanisms in place for humans to ensure that AI systems can be reviewed, challenged, or overridden, especially in critical processes.

Why Microsoft-native tooling isn't enough

Microsoft provides a powerful platform, but the ultimate responsibility for compliance rests with you, the data controller. While Microsoft Purview offers excellent tools for data classification and retention (data governance), it doesn't address the full scope of AI governance.

Native tools often lack:

- Centralized visibility: There is no single dashboard to see every Power Automate flow, every Copilot usage pattern, and every external connection across your entire tenant.

- Contextual control: You can label a document as "confidential," but can you prevent a "citizen developer" from building a Power App that connects that document's library to an unapproved third-party service?

- AI lifecycle management: How do you find and decommission thousands of orphaned or unused AI-powered workflows that are consuming resources and creating potential security backdoors?

Taken together, these gaps show the limits of relying on data governance alone. Microsoft Purview helps classify and protect information, the what. But to govern AI effectively, you also need to control the how and where: which agents are running, what connectors they use, and how services interact with sensitive data. That’s the role of service governance. By combining data governance and service governance, organizations can close the gap and achieve true AI governance.

| Aspect | Data governance | Service governance |
|---|---|---|
| Focus | What information is stored and how it’s classified | How information is accessed, processed, and shared across services and agents |
| Scope | Documents, emails, chats, and their metadata | AI agents, apps, workflows, connectors, and permissions |
| Key questions | Is this file sensitive? Should it be labeled or retained? | Who is running which agent? What services and connectors are in use? Is usage compliant? |
| Strengths | Sensitivity labeling, data retention, and classification policies | Visibility into AI agents, shadow AI detection, lifecycle, and policy control |
| Limitations | Does not cover agent behavior, external connectors, or automation risks | Complements data governance by adding context and control |
| Tool examples | Microsoft Purview | Rencore Governance |

The 4 pillars of effective AI governance

AI governance requires both sides of the equation: data governance to classify and protect information, and service governance to control how that information is used by agents, apps, and workflows. Together, they provide the foundation for safe and compliant AI adoption and help organizations address emerging challenges in a fast-changing AI landscape.

Building on this foundation, a practical AI governance strategy can be organized into four essential pillars:

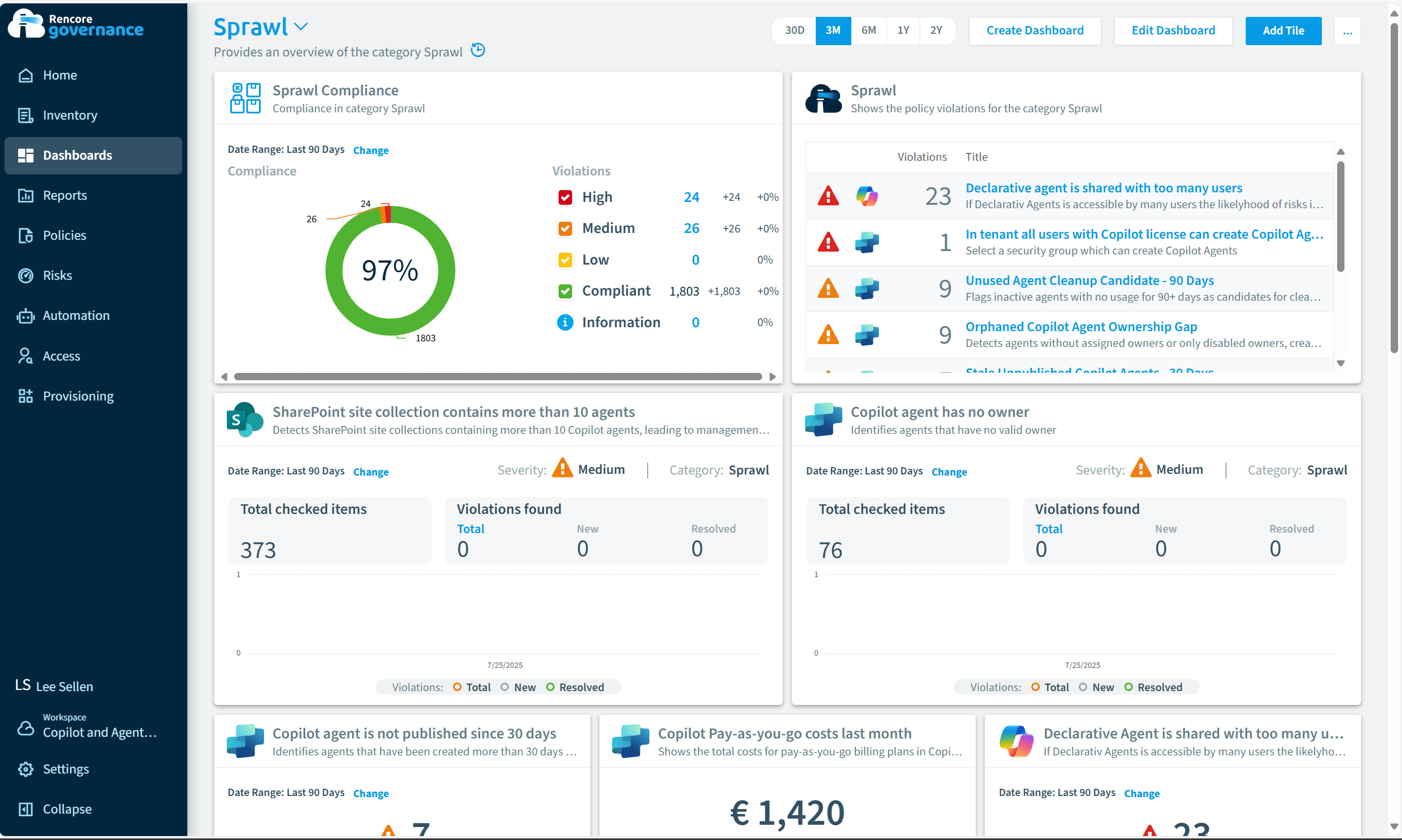

1. Visibility: Know your AI landscape

Effective governance starts with clarity. Organizations need a comprehensive view of every AI-powered asset across their environment before they can manage risk or enforce policies. This is the first step in implementing AI governance practices that align with compliance and ethical standards.

The challenge: AI agents are everywhere. Who is using Copilot and for what? Which departments have built Power Apps with AI Builder? Are there unmanaged flows connecting to external data sources? This sprawl creates a massive blind spot for IT and compliance teams.

The solution (service governance): This is where Rencore's "Service Governance" approach comes in. While Purview handles data governance (the what), Rencore governs the services and containers around it (the how and where). We provide a single pane of glass to:

- Inventory all AI agents: Automatically discover and catalog every Copilot instance, Power App, Power Automate flow, and other AI-enabled resources.

- Detect shadow AI: Identify unauthorized or unmanaged agents and connectors that pose a security or compliance risk.

- Map dependencies: Understand how different AI agents connect to each other and to your critical data sources.

2. Access and policy control: Enforce the rules of the road

Once you have visibility, you must enforce AI governance and embed safeguards directly into your AI processes to ensure AI is used safely and appropriately.

The challenge: How do you prevent Copilot from accessing overshared sensitive data? How do you stop users from connecting Power Automate to non-compliant third-party applications like a personal Dropbox? Manually managing these permissions at scale is impossible.

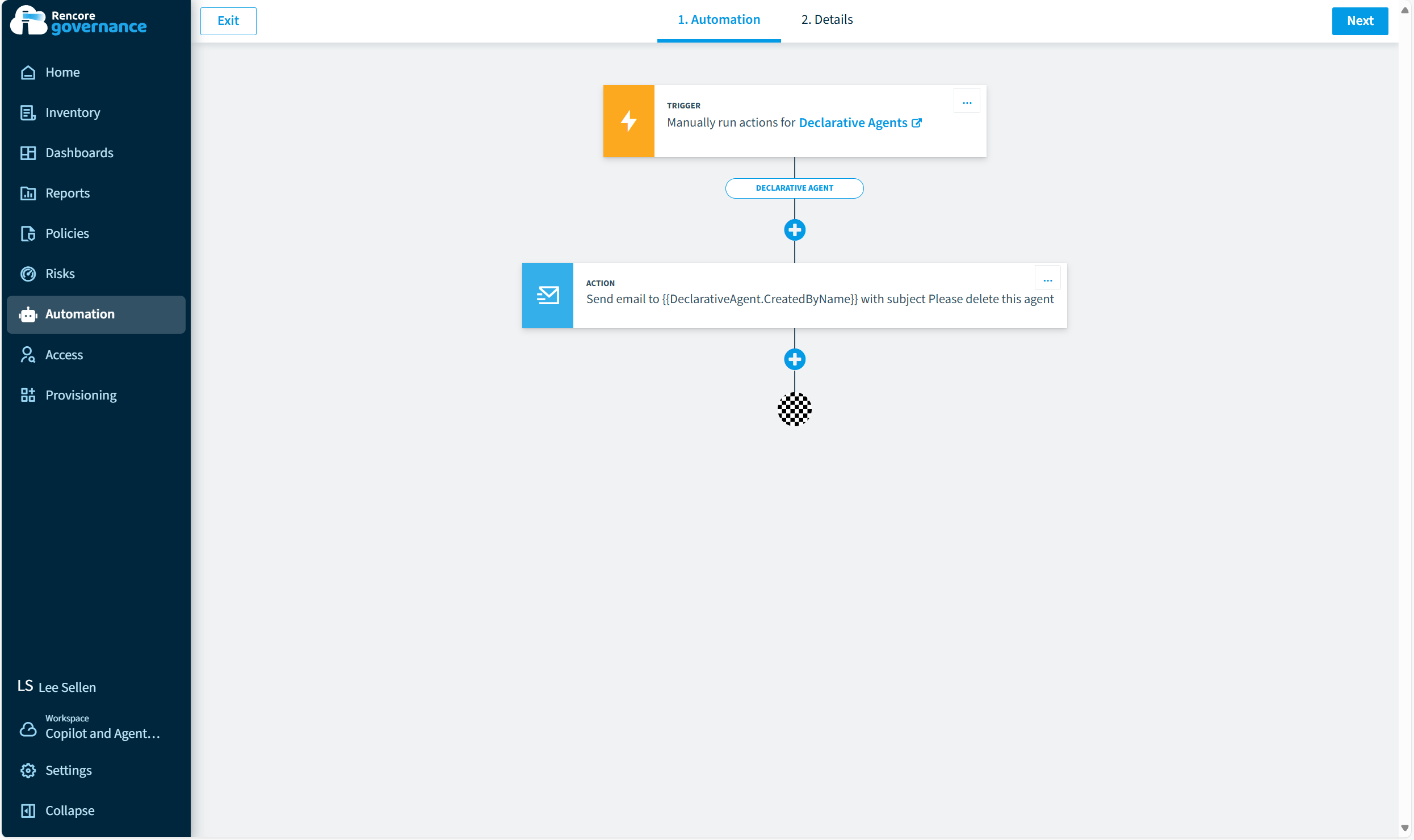

The solution (automation): Effective AI governance tools rely on automation. Rencore allows you to:

- Automate policy enforcement: Create rules that automatically detect and remediate risks. For example, automatically disable a Power Automate flow if it uses an unapproved connector or flag a SharePoint site with inconsistent permissions for review before enabling Copilot access.

- Govern connectors: Control connectors by defining which third-party integrations are approved and which are blocked, reducing the risk of data exfiltration.

- Automate access reviews: Implement automated, recurring reviews of who has access to the data and services that AI agents use, reducing the risk of permission creep.
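The connector rule described above (disable a flow that uses a blocked connector, send anything not yet approved for review) boils down to a simple classification. The following sketch assumes a hypothetical `Flow` inventory record and illustrative allow and block lists; it is not the Rencore policy engine or the Power Platform DLP API, only the decision logic in miniature.

```python
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REVIEW = "review"
    DISABLE = "disable"


@dataclass
class Flow:
    # Hypothetical inventory record, not a Power Automate API object.
    name: str
    owner: str
    connectors: list[str] = field(default_factory=list)


# Illustrative lists; each organization maintains its own.
APPROVED = {"SharePoint", "Office 365 Outlook", "Microsoft Teams"}
BLOCKED = {"Dropbox"}


def evaluate(flow: Flow) -> Action:
    """Blocked connector -> disable; connector not yet approved -> review."""
    used = set(flow.connectors)
    if used & BLOCKED:
        return Action.DISABLE
    if used - APPROVED:
        return Action.REVIEW
    return Action.ALLOW


flows = [
    Flow("Invoice sync", "alice", ["SharePoint", "Office 365 Outlook"]),
    Flow("Backup to personal storage", "bob", ["SharePoint", "Dropbox"]),
    Flow("Lead capture", "carol", ["Microsoft Teams", "Salesforce"]),
]
for f in flows:
    print(f"{f.name}: {evaluate(f).value}")
```

Checking the block list first means a flow that mixes approved and blocked connectors is still disabled, and treating unknown connectors as "review" rather than "allow" keeps new integrations from slipping through by default.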

3. Auditability and logging: Create an unbreakable chain of evidence

To comply with the EU AI Act and pass internal audits, you need a complete, tamper-proof record of all AI activity.

The challenge: Native logging is often fragmented across different admin centers and may not capture the specific details needed for a compliance audit.

The solution (centralized auditing): Rencore creates a centralized record of all important governance actions. You can:

- Track the full lifecycle: Log every event from the creation of an AI agent to its modification and eventual deletion.

- Document policy violations: Maintain a clear record of every policy violation and the automated remediation step that was taken.

- Accelerate audit readiness: When auditors ask for proof of control over your AI systems, you can generate comprehensive reports in minutes, not weeks. While formal AI governance certification is still an emerging field, this level of audit-readiness is one of the strongest ways to demonstrate compliance today.
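In practice, a "tamper-proof" log is usually tamper-evident: you cannot stop a privileged user from editing a stored record, but you can make any edit detectable. One common, generic technique is hash chaining, where each entry stores the hash of the previous one, so changing a past entry breaks every later link. The sketch below is illustrative only; the event fields are hypothetical, and it does not describe how Rencore or Microsoft store audit data.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass(frozen=True)
class Entry:
    event: dict       # hypothetical event fields, e.g. agent, action, actor
    prev_hash: str    # hash of the preceding entry
    entry_hash: str   # SHA-256 over this event plus prev_hash


def _digest(event: dict, prev_hash: str) -> str:
    # sort_keys gives a stable serialization, so equal events hash equally
    payload = json.dumps(event, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: list[Entry], event: dict) -> None:
    prev = log[-1].entry_hash if log else GENESIS
    log.append(Entry(event, prev, _digest(event, prev)))


def verify(log: list[Entry]) -> bool:
    """Recompute every link; an edited, reordered, or deleted entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry.prev_hash != prev or entry.entry_hash != _digest(entry.event, prev):
            return False
        prev = entry.entry_hash
    return True


log: list[Entry] = []
append(log, {"agent": "HR FAQ bot", "action": "created", "actor": "alice"})
append(log, {"agent": "HR FAQ bot", "action": "connector_added", "connector": "Dropbox"})
append(log, {"agent": "HR FAQ bot", "action": "disabled", "reason": "blocked connector"})
print(verify(log))  # True

log[1].event["connector"] = "SharePoint"  # quietly rewrite history
print(verify(log))  # False
```

A chain on its own cannot detect someone silently dropping the newest entries or recomputing every hash, so production designs typically also store the latest hash somewhere the log writer cannot modify.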

4. Compliance and risk management: Align with regulations

Your AI governance framework must be explicitly designed to meet external regulations like GDPR and the EU AI Act, as well as your own internal security policies.

The challenge: Manually mapping hundreds of technical controls to the legal requirements of a regulation is a complex, time-consuming, and error-prone task. Organizations need a way to translate regulations like the EU AI Act and GDPR into actionable governance policies without overwhelming IT and compliance teams.

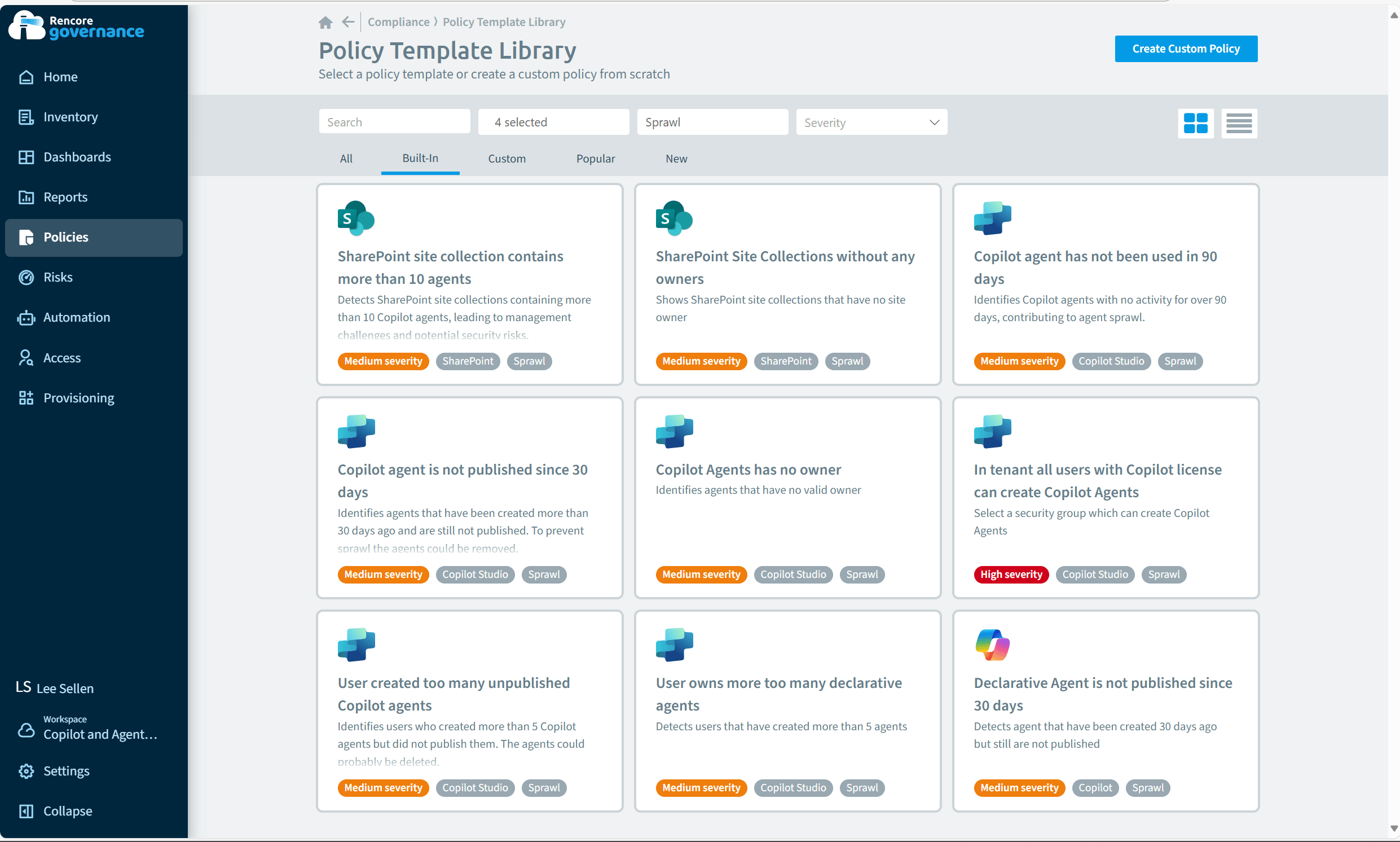

The solution (pre-built templates and flexibility): Rencore simplifies compliance by:

- Providing pre-built policy templates: Get a head start with out-of-the-box policies you can align with GDPR, the EU AI Act, and other common compliance scenarios. This helps keep your AI initiatives within legal and ethical boundaries.

- Offering a flexible policy engine: Easily customize templates or build your own policies from scratch to match your organization's values and operational needs. With our advanced automation, you can build sophisticated workflows so AI initiatives align with both regulatory demands and organizational priorities.

- Empowering delegated governance: Allow business units or regional compliance officers to manage their own policies within the centralized framework, distributing the workload without sacrificing control. This collaborative approach ensures diverse stakeholders are involved in AI governance.

How Rencore closes the AI governance gap

Microsoft provides the engine; Rencore provides the guardrails, the dashboard, and the brakes. We are the essential service governance platform that enables you to roll out AI across your organization quickly, but more importantly, safely.

Here’s how we directly solve the challenges discussed:

- 360° visibility: Our comprehensive inventory gives you a complete picture of all AI agents and their dependencies across M365, Copilot, and the Power Platform. For example, you can automatically discover Copilot agents without owners or Power Automate flows connecting to external services, ensuring nothing runs unnoticed.

- Control over shadow AI: We automatically detect and help you manage the lifecycle of unauthorized or unmanaged agents, closing a major security loophole. Best practices include flagging unpublished agents older than 30 days or unused agents inactive for 90+ days and cleaning them up before they create compliance risks.

- Automated risk mitigation: Our policy automation engine works 24/7 to enforce your rules, from cleaning up stale content that could mislead AI to preventing the use of risky connectors. For instance, you can block Copilot agents connected to confidential SharePoint sites or prevent external connectors like personal Dropbox accounts from exposing sensitive data.

- Accelerated compliance: With pre-built templates, you can achieve audit-readiness in days, not months. This means you can map requirements such as logging AI activities or enforcing human oversight directly into policies instead of relying on manual checklists.

- Cost optimization: By tracking usage and adoption of tools like Copilot and identifying unused licenses and resources, we provide the data you need to optimize costs and prove ROI. For example, you can reclaim inactive Copilot licenses unused for 30+ days or stop autonomous agents running on costly scheduled triggers before they inflate bills.

- Fast time-to-value: Our solution is designed for rapid onboarding, so you can implement AI governance without lengthy deployments. Within minutes, you can generate a full tenant inventory, detect externally shared files, and begin enforcing best-practice policies, allowing your IT and compliance teams to start mitigating risks immediately.
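The lifecycle and license thresholds mentioned above (agents without owners, unpublished agents older than 30 days, agents inactive for 90+ days, Copilot licenses unused for 30+ days) come down to date arithmetic over an inventory. This is a sketch under stated assumptions: the `Agent` record and the per-user last-activity map are hypothetical stand-ins for whatever your inventory actually exports, and treating a never-used license as reclaimable is an assumption of this sketch.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

UNPUBLISHED_LIMIT = timedelta(days=30)   # "unpublished agents older than 30 days"
INACTIVE_LIMIT = timedelta(days=90)      # "inactive for 90+ days"
LICENSE_IDLE_LIMIT = timedelta(days=30)  # "licenses unused for 30+ days"


@dataclass
class Agent:
    # Hypothetical inventory record for a Copilot agent.
    name: str
    owner: Optional[str]
    created: date
    published: bool
    last_used: Optional[date]


def cleanup_reasons(agent: Agent, today: date) -> list[str]:
    reasons = []
    if agent.owner is None:
        reasons.append("no owner")
    if not agent.published and today - agent.created > UNPUBLISHED_LIMIT:
        reasons.append("unpublished for more than 30 days")
    # An agent that was never used counts as inactive since its creation date.
    if today - (agent.last_used or agent.created) >= INACTIVE_LIMIT:
        reasons.append("inactive for 90+ days")
    return reasons


def reclaimable_licenses(last_active: dict[str, Optional[date]], today: date) -> list[str]:
    """Users whose Copilot license has gone unused for 30+ days (or was never used)."""
    return sorted(
        user for user, seen in last_active.items()
        if seen is None or today - seen >= LICENSE_IDLE_LIMIT
    )


today = date(2025, 6, 1)
agents = [
    Agent("Sales helper", "dana", date(2025, 1, 10), True, date(2025, 5, 28)),
    Agent("Draft bot", "erik", date(2025, 4, 1), False, None),
    Agent("Old FAQ", None, date(2024, 9, 1), True, date(2025, 1, 15)),
]
for a in agents:
    print(a.name, cleanup_reasons(a, today))
print(reclaimable_licenses({"dana": date(2025, 5, 30), "erik": date(2025, 4, 1), "fay": None}, today))
```

Returning a list of reasons rather than a yes/no flag lets a reviewer see why an agent was selected before anything is deleted or a license is reclaimed.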

Finally, Rencore supports a delegated governance model that prevents IT from becoming a bottleneck. Our Microsoft Teams app brings governance tasks directly into the tools employees already use. Business units can request new workspaces or complete access reviews in Teams, while IT maintains centralized oversight and control.

This balance ensures faster processes, stronger adoption, and shared accountability across the organization. By distributing responsibility while maintaining central oversight, the Rencore Teams app brings responsible AI governance best practices into everyday workflows.

From risk to readiness: Your path to AI governance

AI offers a monumental opportunity to transform how organizations work. But without governance, the same technology can expose sensitive data, inflate costs, or trigger compliance failures under regulations like the EU AI Act.

The path forward is clear: adopt a trustworthy AI governance framework that turns risk into readiness and ensures responsible AI development. With the right controls in place, AI adoption becomes both an example of responsible AI use and a genuine driver of business value.

Ready to take control of your M365 and AI environment? Start governing smarter with Rencore!

Frequently asked questions (FAQ)

What is AI governance?

AI governance is the complete system of rules, processes, and tools an organization uses to ensure that artificial intelligence is used in a secure, compliant, ethical, and responsible manner. It covers everything from data access and model transparency to user policies and regulatory adherence.

Does Microsoft provide AI governance tools?

Yes, Microsoft Purview supports data governance by classifying and protecting document information. However, it lacks a comprehensive service governance layer to manage the context: services, permissions, containers, and AI agents. Rencore delivers this essential contextual governance.

What is the EU AI Act, and when does it apply?

The EU AI Act, adopted in 2024 and in force since August 1, 2024, applies to any organization developing, selling, or using AI systems in the EU. Its staged rollout (2025–2027) introduces requirements on risk management, transparency, and human oversight.

What tools help govern Microsoft Copilot?

Governing Microsoft Copilot needs more than native controls or Purview’s data sensitivity labeling. Full governance requires preparing your data, monitoring oversharing, auditing usage, and ensuring EU AI Act compliance. Rencore Governance adds the missing visibility and control layer, making it a complete AI governance software solution for Microsoft 365.